Top 50+ Database Engineer Interview Questions

Preparing for a technical interview? Whether you’re a beginner or an experienced professional, reviewing the most common Database Engineer Interview Questions can help you feel more confident and ready. In this guide, we’ve compiled over 40 essential questions that cover everything from database design and SQL to performance tuning and security. Let’s dive in and get you interview-ready!

What Does a Data Engineer Do?

A Data Engineer is responsible for designing, building, and maintaining the systems and architecture that enable organisations to collect, store, and analyse data efficiently. They create pipelines that move data from various sources into a usable format for analysts and data scientists. From setting up databases and ensuring data quality to managing large-scale data processing systems, a data engineer plays a crucial role in turning raw data into actionable insights.

50 Must-Know Interview Questions for Junior Data Engineers

Introductory Phase (About You)

1. Can you tell us about yourself?

2. Why did you choose a career in data engineering?

3. What excites you most about this role?

4. What are your strengths as an engineer?

5. What do you enjoy doing outside of work or studies?

Background and Experience

1. Have you worked on any data-related projects? Can you describe one?

2. What tools and technologies have you used for data engineering?

3. Have you ever worked with large datasets? What challenges did you face?

4. What is the most successful project you’ve worked on, and why?

5. How do you stay updated with trends in data engineering?

Technical/Tools Expertise (Role-Specific Skills)

1. What do you know about data pipelines?

2. What is ETL, and how does it differ from ELT?

3. What experience do you have with SQL?

4. Have you worked with any programming languages for data engineering?

5. What is your experience with cloud platforms?

Behavioral and Situational Questions

1. How do you handle tight deadlines for data projects?

2. What would you do if a data pipeline failed in production?

3. Describe a time when you worked with a team to deliver a project.

4. How do you handle feedback or criticism on your work?

5. What would you do if a data model you built didn’t meet user requirements?

6. Have you ever had to learn a new tool or technology quickly? How did you manage it?

7. What would you do if a client requested real-time data access instead of batch processing?

Critical Thinking

1. How would you design a data pipeline for a retail company?

2. What steps would you take to debug a slow-running query?

3. How do you prioritize tasks when working on multiple projects?

4. What would you do if your data ingestion process was missing records?

5. How would you decide whether to use a relational or non-relational database for a project

Performance-Based Questions

1. Can you write a SQL query to find duplicate records in a table?

2. Can you design a basic data pipeline using an ETL tool or script?

3. How would you optimize a data pipeline to handle increasing data volume?

4. Can you set up a data warehouse schema for reporting?

5. What steps would you take to create a real-time data streaming solution?

Problem-Solving and Analytical Thinking

1. What would you do if a database query took too long to execute?

2. How would you reduce wasted spend in a PPC campaign?

3. What steps would you take to fix a corrupted data file?

4. How do you ensure scalability in your data engineering solutions?

5. What would you do if your data pipeline introduced duplicate records?

Career Growth

1. What do you hope to achieve in this role?

2. Where do you see yourself in 3-5 years?

3. What kind of campaigns inspire you?

4. Are you interested in learning more about other areas of digital marketing?

5. What motivates you to grow as a designer?

Introductory Phase (About You)

This section of the Data Engineer Interview Questions focuses on your background, motivations, and personality. Interviewers ask these questions to get to know you better and understand why you’re interested in data engineering. It’s your chance to make a strong first impression by sharing your journey, interests, and how your experiences align with the role.

1. Can you tell us about yourself?

What Interviewers Want:

A concise overview of your background, interest in data engineering, and any relevant experience or skills.

Strong Answer:

“I have a degree in Computer Science and recently completed a certification in data engineering. During my coursework, I worked on building ETL pipelines and gained experience with tools like Python, SQL, and Apache Spark. I’m passionate about creating efficient data workflows that enable businesses to make informed decisions.”

Poor Answer:

“I studied Computer Science and learned tools like Python and SQL. I’m looking to start my career in data engineering.”

2. Why did you choose a career in data engineering?

What Interviewers Want:

A clear explanation of your passion for working with data, solving complex problems, and creating efficient systems.

Strong Answer:

“I enjoy working with data and solving complex problems to enable actionable insights. Data engineering combines my interests in coding, data analysis, and building scalable systems. I find it rewarding to create systems that ensure data is reliable and accessible for decision-making.”

Poor Answer:

“I like working with data and coding, so data engineering seemed like a good fit for me.”

3. What excites you most about this role?

What Interviewers Want:

Enthusiasm for working with data pipelines, tools, or contributing to the company’s data-driven decisions.

Strong Answer:

“I’m excited about the opportunity to work with large-scale data pipelines and contribute to the company’s data-driven strategies. I admire how your team uses modern tools and technologies like Spark and Kafka, and I’m eager to learn from the experienced team while applying my skills to meaningful projects.”

Poor Answer:

“I’m excited to work on data pipelines and learn more about tools and technologies.”

4. What are your strengths as an engineer?

What Interviewers Want:

Confidence in skills like problem-solving, analytical thinking, and attention to detail.

Strong Answer:

“My strengths include strong problem-solving skills, attention to detail, and a solid foundation in Python and SQL. I’m also a quick learner, which helps me adapt to new tools and technologies efficiently. For example, I recently learned Apache Airflow to automate workflows in one of my projects.”

Poor Answer:

“My strengths are problem-solving, attention to detail, and proficiency in tools like Python and SQL.”

5. What do you enjoy doing outside of work or studies?

What Interviewers Want:

Insight into hobbies or activities that reflect curiosity, technical skills, or problem-solving.

Strong Answer:

“I enjoy working on personal coding projects, such as automating data collection from APIs to create dashboards. I also like solving puzzles and logic games, which help improve my analytical thinking. Additionally, I stay updated on the latest trends in data engineering by following tech blogs and participating in online communities.”

Poor Answer:

“I like coding, solving puzzles, and reading about data engineering trends.”

Background and Experience

In this section of Data Engineer Interview Questions, interviewers aim to understand your hands-on experience, technical exposure, and how you’ve applied your skills in real-world scenarios. Whether it’s through academic projects, internships, or self-initiated work, sharing specific examples will help demonstrate your capability and growth as a future data engineer.

1. Have you worked on any data-related projects? Can you describe one?

What Interviewers Want:

Examples of projects showcasing experience in building or managing data pipelines, even academic or personal projects.

Strong Answer:

“Yes, I worked on a project to build an ETL pipeline for a retail dataset. I used Python to extract data from an API, transformed it using Pandas to clean and standardize the data, and loaded it into a PostgreSQL database. I automated the pipeline using Apache Airflow, which improved data processing efficiency by 30% compared to manual workflows.”

Poor Answer:

“I built an ETL pipeline using Python and SQL to process retail data and store it in a database.”

2. What tools and technologies have you used for data engineering?

What Interviewers Want:

Familiarity with tools like SQL, Python, Spark, or cloud platforms like AWS, Azure, or GCP.

Strong Answer:

“I’ve worked with Python for data manipulation and automation, SQL for querying and managing databases, and Apache Spark for processing large datasets. I’ve also used AWS S3 for storage and Lambda for serverless functions. For workflow orchestration, I’ve recently started learning Apache Airflow.”

Poor Answer:

“I’ve used Python, SQL, and tools like Spark and AWS for data engineering tasks.”

3. Have you ever worked with large datasets? What challenges did you face?

What Interviewers Want:

Problem-solving skills and ability to handle scalability issues.

Strong Answer:

“Yes, I worked with a dataset containing millions of rows for a financial analysis project. One challenge was optimizing SQL queries to reduce execution time. I resolved this by indexing frequently queried columns and breaking the dataset into smaller partitions for parallel processing using Spark. This reduced processing time significantly.”

Poor Answer:

“I’ve worked with large datasets and used indexing and Spark to process them efficiently.”

4. What is the most successful project you’ve worked on, and why?

What Interviewers Want:

Passion for a project and the ability to explain the technical challenges and solutions.

Strong Answer:

“My most successful project was designing a data pipeline for analyzing e-commerce trends. By integrating data from multiple sources using Python and cleaning it with Spark, I created a real-time dashboard in Tableau. The project was successful because it reduced reporting time by 40% and provided actionable insights for stakeholders.”

Poor Answer:

“I built a data pipeline for e-commerce analysis that helped stakeholders get insights faster.”

5. How do you stay updated with trends in data engineering?

What Interviewers Want:

Commitment to continuous learning through blogs, courses, or industry events.

Strong Answer:

“I follow industry blogs like Towards Data Science and Data Engineering Weekly. I also take courses on platforms like Coursera and attend webinars on emerging tools and technologies. Additionally, I participate in online communities like Reddit and LinkedIn groups to learn from industry professionals.”

Poor Answer:

“I read blogs and take online courses to stay updated on the latest data engineering trends.”

Technical/Tools Expertise (Role-Specific Skills)

This part of the Data Engineer Interview Questions focuses on your technical knowledge and hands-on experience with tools and technologies essential for data engineering. Interviewers assess your understanding of data pipelines, programming languages, databases, and cloud platforms to ensure you have the skills needed to build and maintain efficient data systems.

1. What do you know about data pipelines?

What Interviewers Want:

Understanding of data ingestion, processing, and storage workflows.

Strong Answer:

“A data pipeline is a series of processes used to collect, transform, and store data from various sources to a destination, like a data warehouse or a database. It involves data ingestion, cleaning, transformation, and loading. Tools like Apache Airflow, Spark, and Kafka are commonly used to automate and optimize these workflows.”

Poor Answer:

“A data pipeline collects, processes, and stores data for analysis.”

2. What is ETL, and how does it differ from ELT?

What Interviewers Want:

Awareness of data transformation methods and their use cases.

Strong Answer:

“ETL stands for Extract, Transform, Load, where data is transformed before being loaded into the destination system. ELT, on the other hand, stands for Extract, Load, Transform, where raw data is loaded into the destination and transformed later, often within the data warehouse. ELT is more suitable for large-scale data processing in modern cloud environments.”

Poor Answer:

“ETL transforms data before loading, while ELT loads raw data and transforms it later.”

3. What experience do you have with SQL?

What Interviewers Want:

Proficiency in writing queries, creating joins, and optimizing database performance.

Strong Answer:

“I’m proficient in SQL and have used it extensively for querying, data transformation, and performance optimization. I’ve written complex queries involving joins, subqueries, and window functions. For example, I recently optimized a slow query by indexing and restructuring the database schema, which improved execution time by 50%.”

Poor Answer:

“I’m experienced in SQL and have used it to write queries and join tables for data analysis.”

4. Have you worked with any programming languages for data engineering?

What Interviewers Want:

Familiarity with Python, Scala, or Java for data manipulation and pipeline building.

Strong Answer:

“I have extensive experience with Python, using libraries like Pandas and PySpark for data processing. I’ve also used Python to build ETL pipelines and automate workflows. Recently, I started learning Scala to work with Apache Spark more effectively.”

Poor Answer:

“I’ve used Python for data processing and started learning Scala for Spark.”

5. What is your experience with cloud platforms?

What Interviewers Want:

Knowledge of cloud services like AWS S3, GCP BigQuery, or Azure Data Factory.

Strong Answer:

“I’ve worked with AWS, using S3 for data storage, Lambda for serverless computing, and Redshift for analytics. I’m also familiar with GCP services like BigQuery for querying large datasets and Dataflow for stream processing. I’ve deployed data pipelines and monitored performance using these platforms.”

Poor Answer:

“I’ve used AWS and GCP for data storage and analysis tasks.”

Behavioral and Situational Questions

This section of the Data Engineer Interview Questions focuses on behavioural and situational questions. These are designed to assess how you’ve handled real-world situations in the past or how you would respond to challenges as a Data Engineer. Employers use these questions to evaluate your problem-solving skills, communication, teamwork, adaptability, and decision-making in a professional setting. Prepare to answer using examples that reflect your experience, values, and approach to work.

1. How do you handle tight deadlines for data projects?

What Interviewers Want:

Time management and prioritization skills.

Strong Answer:

“I prioritize tasks by identifying the most critical components that directly impact the project’s goals. I communicate with the team to ensure everyone is aligned on the deliverables and timelines. If needed, I simplify processes, such as using pre-built tools or scripts to save time, while ensuring the project’s quality isn’t compromised.”

Poor Answer:

“I focus on the most important tasks and work quickly to meet the deadline.”

2. What would you do if a data pipeline failed in production?

What Interviewers Want:

Problem-solving skills and ability to troubleshoot under pressure.

Strong Answer:

“I’d immediately check the logs to identify the error and determine its impact. If the issue is urgent, I’d implement a temporary fix to restore functionality while investigating the root cause. Once resolved, I’d document the issue and update monitoring or alerting systems to prevent similar failures.”

Poor Answer:

“I’d check the logs to fix the issue and make sure it doesn’t happen again.”

3. Describe a time when you worked with a team to deliver a project.

What Interviewers Want:

Collaboration skills and your role in ensuring the project’s success.

Strong Answer:

“In a group project to build a data warehouse, I collaborated with teammates to integrate multiple data sources. My role was to design the ETL pipeline and ensure data consistency. We held regular check-ins to track progress and address challenges. By working together, we delivered a scalable solution that met the client’s needs.”

Poor Answer:

“I worked with a team to build a data warehouse and ensured everything worked correctly.”

4. How do you handle feedback or criticism on your work?

What Interviewers Want:

Openness to constructive criticism and a willingness to improve.

Strong Answer:

“I view feedback as an opportunity to improve. For example, when a colleague pointed out inefficiencies in a query I wrote, I reviewed their suggestions, optimized the query, and learned a new technique for future use. Feedback helps me grow and deliver better results.”

Poor Answer:

“I listen to feedback and make changes to improve my work.”

5. What would you do if a data model you built didn’t meet user requirements?

What Interviewers Want:

Adaptability to revise and improve solutions based on feedback.

Strong Answer:

“I’d meet with the users to understand their concerns and clarify their needs. Then, I’d revise the model, ensuring it aligns with their expectations while maintaining data integrity. For instance, I once adjusted a reporting model by adding new fields after realizing they were critical for the users’ analysis.”

Poor Answer:

“I’d review the user feedback and make changes to the data model.”

6. Have you ever had to learn a new tool or technology quickly? How did you manage it?

What Interviewers Want:

Adaptability and eagerness to learn.

Strong Answer:

“Yes, I recently needed to learn Apache Airflow for a project. I started with online tutorials and documentation to understand its workflow orchestration capabilities. Then, I set up a small test pipeline to apply my learning. Within a week, I felt confident enough to use it in a production environment.”

Poor Answer:

“I learned Apache Airflow by watching tutorials and practicing with small projects.”

7. What would you do if a client requested real-time data access instead of batch processing?

What Interviewers Want:

Awareness of streaming technologies and ability to propose solutions.

Strong Answer:

“I’d discuss the specific requirements with the client, such as latency and data volume. Then, I’d evaluate streaming technologies like Apache Kafka or AWS Kinesis to implement a real-time solution. For example, I’d set up a Kafka pipeline to ingest, process, and deliver data in real-time while ensuring scalability and reliability.”

Poor Answer:

“I’d explore tools like Kafka to provide real-time data access and meet the client’s needs.”

Critical Thinking

Critical thinking is an essential skill for any data engineer. It involves analyzing data problems logically, evaluating multiple solutions, and making sound decisions based on evidence. In Data Engineer Interview Questions, employers often test your ability to think critically because data engineers need to solve complex problems, troubleshoot pipelines, and ensure data accuracy efficiently. Demonstrating strong critical thinking skills shows that you can handle challenges thoughtfully and deliver reliable data solutions.

1. How would you design a data pipeline for a retail company?

What Interviewers Want:

Logical thinking, creativity, and focus on scalability and efficiency.

Strong Answer:

“I’d start by understanding the company’s data sources, such as point-of-sale systems, inventory databases, and e-commerce platforms. I’d design an ETL pipeline to extract data from these sources, transform it to ensure consistency and accuracy, and load it into a data warehouse like Redshift or BigQuery. The pipeline would include monitoring and logging to ensure data quality and scalability as the company grows. Tools like Apache Airflow could be used to orchestrate the workflow.”

Poor Answer:

“I’d extract data, clean it, and load it into a database or data warehouse for analysis.”

2. What steps would you take to debug a slow-running query?

What Interviewers Want:

A structured approach to identifying and resolving performance bottlenecks.

Strong Answer:

“I’d start by analyzing the query execution plan to identify bottlenecks like table scans or missing indexes. Next, I’d optimize the query by adding indexes, rewriting joins, or using partitioning to reduce the data scanned. If the issue persists, I’d review the database schema and consider restructuring it to improve performance.”

Poor Answer:

“I’d check the query execution plan and add indexes or optimize joins to make it faster.”

3. How do you prioritize tasks when working on multiple projects?

What Interviewers Want:

Time management and organization skills.

Strong Answer:

“I prioritize tasks by assessing their urgency and impact on the overall goals. I use tools like Trello or Jira to track progress and break down tasks into manageable steps. For example, if two projects have similar deadlines, I’d focus on the one that has a higher business impact or dependencies to avoid delays.”

Poor Answer:

“I focus on urgent tasks first and manage time to meet deadlines for all projects.”

4. What would you do if your data ingestion process was missing records?

What Interviewers Want:

Problem-solving skills to investigate and fix the issue.

Strong Answer:

“I’d start by reviewing the logs to identify where the data loss occurred. Then, I’d verify the source system for issues like connectivity problems or incomplete exports. If necessary, I’d rerun the process for the missing data and implement validation checks to ensure all records are captured in future runs.”

Poor Answer:

“I’d check the logs and source system to fix the issue and reprocess the missing data.”

5. How would you decide whether to use a relational or non-relational database for a project?

What Interviewers Want:

Understanding of trade-offs based on data type, scalability, and query requirements.

Strong Answer:

“I’d assess the data structure and query requirements. If the data is structured and requires complex joins or ACID compliance, I’d choose a relational database like PostgreSQL. For unstructured or semi-structured data, such as JSON or documents, or when scalability is a priority, I’d opt for a non-relational database like MongoDB or Cassandra.”

Poor Answer:

“I’d choose a relational database for structured data and a non-relational one for unstructured data.”

Performance-Based Questions

In Data Engineer Interview Questions, performance-based questions are designed to evaluate your practical skills and how you handle real-world data engineering tasks. These questions often focus on your ability to design efficient data pipelines, optimize queries, and troubleshoot performance issues. Demonstrating your hands-on experience and problem-solving abilities helps interviewers assess how well you can deliver results under pressure.

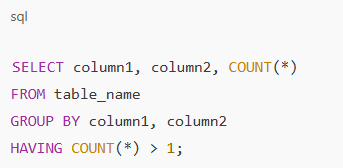

1. Can you write a SQL query to find duplicate records in a table?

What Interviewers Want:

Practical knowledge of SQL and data analysis.

2. Can you design a basic data pipeline using an ETL tool or script?

What Interviewers Want:

Ability to demonstrate an understanding of pipeline workflows.

Strong Answer:

“I’d use Python to extract data from an API, transform it with Pandas to clean and standardize the data, and load it into a PostgreSQL database. The workflow would be automated using Apache Airflow to schedule the job, with logging and alerting for error tracking.”

Poor Answer:

“I’d use Python to extract, clean, and load data into a database, automating the process with Airflow.”

3. How would you optimize a data pipeline to handle increasing data volume?

What Interviewers Want:

Knowledge of scaling strategies like partitioning, parallelism, or distributed systems.

Strong Answer:

“I’d implement partitioning to process smaller chunks of data and use parallel processing to speed up workflows. For storage, I’d switch to a distributed system like HDFS or S3. I’d also monitor performance metrics and cache intermediate results to avoid redundant computations.”

Poor Answer:

“I’d use partitioning and parallel processing to handle larger data volumes.”

4. Can you set up a data warehouse schema for reporting?

What Interviewers Want:

Understanding of designing data structures for analytics.

Strong Answer:

“I’d use a star schema for reporting, with fact tables containing transaction details and dimension tables for descriptive attributes like product, customer, and time. This structure simplifies querying and improves performance for analytics. For example, I’d design a sales fact table linked to dimensions for products, customers, and dates.”

Poor Answer:

“I’d create a star schema with fact and dimension tables to make reporting easier.”

5. What steps would you take to create a real-time data streaming solution?

What Interviewers Want:

Awareness of streaming tools and their implementation.

Strong Answer:

“I’d use Apache Kafka to collect and publish real-time events. The data would be processed using tools like Apache Flink or Spark Streaming and stored in a low-latency database like Cassandra or Elasticsearch. To monitor the pipeline, I’d set up dashboards using tools like Grafana.”

Poor Answer:

“I’d use Kafka for real-time data collection and Spark for processing.”

Problem-Solving and Analytical Thinking

“I’d use Apache Kafka to collect and publish real-time events. The data would be processed using tools like Apache Flink or Spark Streaming and stored in a low-latency database like Cassandra or Elasticsearch. To monitor the pipeline, I’d set up dashboards using tools like Grafana.”

1. What would you do if a database query took too long to execute?

Strong Answer:

“I’d start by analyzing the query execution plan to identify bottlenecks, such as full table scans or inefficient joins. Then, I’d optimize the query by adding appropriate indexes, rewriting joins, or breaking the query into smaller parts. If the issue persists, I’d evaluate the database schema and consider partitioning or denormalization for better performance.”

Poor Answer:

“I’d check the query for inefficiencies and add indexes to speed it up.”

2. How would you reduce wasted spend in a PPC campaign?

Strong Answer:

“I’d implement validation checks at each stage of the data pipeline to ensure consistency, such as checksums or hash comparisons. I’d also use logging to track data transformations and reconciliation scripts to compare data across systems, ensuring no discrepancies.”

Poor Answer:

“I’d use validation checks and logging to ensure data is consistent.”

3. What steps would you take to fix a corrupted data file?

Strong Answer:

“I’d first isolate the corrupted file and analyze logs to identify the cause. If there’s a backup available, I’d restore the data from there. If not, I’d attempt to clean and repair the file using tools like Pandas or custom scripts. I’d also implement checks to prevent future corruption, such as validation during ingestion.”

Poor Answer:

“I’d try to restore the data from a backup or clean the corrupted file manually.”

4. How do you ensure scalability in your data engineering solutions?

Strong Answer:

“I design pipelines using distributed systems like Apache Spark or Hadoop to handle increasing data volume. I use partitioning and parallelism to process data efficiently and deploy solutions on scalable cloud platforms like AWS or GCP. Monitoring tools are set up to identify and address performance bottlenecks as the system grows.”

Poor Answer:

“I use distributed systems like Spark and scalable cloud platforms to handle large datasets.”

5. What would you do if your data pipeline introduced duplicate records?

Strong Answer:

“I’d first identify the step in the pipeline where duplicates are introduced by analyzing logs and tracing the data flow. Then, I’d add a deduplication step, such as using SQL’s DISTINCT clause or filtering with unique IDs during transformation. I’d also implement validation checks to prevent duplicates in future runs.”

Poor Answer:

“I’d check the logs, find the issue, and remove duplicates using SQL or other tools.”

6. How would you approach integrating data from multiple sources?

Strong Answer:

“I’d start by understanding the schema and structure of each source. Then, I’d clean and normalize the data to ensure consistency. Using a tool like Apache NiFi or Python, I’d map schemas and transform the data into a unified format before loading it into a central repository for analysis.”

Poor Answer:

“I’d clean the data and map it to a common format for integration.”

7. What would you do if a client needed real-time insights but your system only supported batch processing?

Strong Answer:

“I’d propose setting up a streaming solution, like Apache Kafka or AWS Kinesis, for real-time ingestion and processing while keeping the batch pipeline for historical analysis. As an interim solution, I’d increase the frequency of batch processing to provide near-real-time updates while working on implementing the streaming architecture.”

Poor Answer:

“I’d set up a streaming tool like Kafka to provide real-time insights for the client.”

8. How do you prioritize data quality versus speed in a project?

What Interviewers Want:

Knowledge of mobile-friendly ads, responsive landing pages, and targeting mobile devices.

Strong Answer:

“I prioritize based on the project’s goals. If the focus is on analytics or reporting, data quality takes precedence to ensure accuracy. For real-time monitoring, I balance speed with quality by validating critical data points while deferring non-essential validations to maintain performance.”

Poor Answer:

“I decide based on the project’s goals, focusing on quality for analytics and speed for real-time needs.”

9. What steps would you take to troubleshoot a broken data pipeline?

What Interviewers Want:

Ability to refine targeting to balance reach and relevance.

Strong Answer:

“I’d explain the importance of balancing reach and relevance to maximize ROI. I’d recommend segmenting the audience into smaller, more targeted groups and creating tailored ads for each segment. If broad targeting is essential, I’d use detailed exclusions and negative keywords to refine the audience.”

Poor Answer:

“I’d try to target a broad audience while adding filters to make it more relevant.”

10. How do you handle seasonal fluctuations in campaign performance?

What Interviewers Want:

Awareness of adjusting budgets, bids, and targeting for seasonality.

Strong Answer:

“I’d analyze historical data to anticipate seasonal trends and adjust budgets and bids accordingly. For example, I’d increase spending during peak seasons and focus on high-performing keywords. In the off-season, I’d shift the strategy to brand awareness or audience building to maintain visibility.”

Poor Answer:

“I’d increase the budget during peak seasons and lower it during slower times.”

Career Growth

In Data Engineer Interview Questions, problem-solving and analytical thinking are crucial skills. These questions assess your ability to break down complex data challenges, identify patterns, and develop effective solutions. Interviewers look for candidates who can think logically, analyze data thoroughly, and approach problems methodically to ensure accurate and efficient data processing.

1. What do you hope to achieve in this role?

What Interviewers Want:

A clear desire to grow in PPC advertising and contribute to team success.

Strong Answer:

“I hope to deepen my expertise in PPC advertising by managing campaigns across different industries and learning from the experienced team here. I aim to improve my skills in strategy development, data analysis, and campaign optimization while contributing to the success of the company’s advertising efforts.”

Poor Answer:

“I want to learn more about PPC and work on campaigns to gain experience.”

2. Where do you see yourself in 3-5 years?

What Interviewers Want:

A vision of long-term growth in PPC or broader digital marketing roles.

Strong Answer:

“In 3-5 years, I see myself as a senior PPC specialist or digital marketing manager, leading campaigns and mentoring junior team members. I’d like to expand my expertise to include multi-channel marketing strategies and play a key role in driving measurable results for the business.”

Poor Answer:

“I want to be in a senior role where I can manage campaigns and help the team.”

3. What kind of campaigns inspire you?

What Interviewers Want:

Passion for creating impactful and innovative advertising campaigns.

Strong Answer:

“I’m inspired by campaigns that combine creativity with data-driven strategies to deliver exceptional results. For example, I admire how brands use storytelling in video ads or remarketing campaigns to engage users and boost conversions. Campaigns that focus on personalization and user experience are particularly exciting to me.”

Poor Answer:

“I enjoy creative campaigns that get results and engage users effectively.”

4. Are you interested in learning more about other areas of digital marketing?

What Interviewers Want:

Interest in expanding skills and cross-functional collaboration.

Strong Answer:

“Absolutely. I’m particularly interested in SEO and content marketing, as they complement PPC strategies by improving organic visibility and user engagement. Learning more about these areas would help me develop integrated campaigns and collaborate effectively with other teams.”

Poor Answer:

“Yes, I’d like to learn more about areas like SEO or social media to improve my skills.”

5. What motivates you to grow as a designer?

What Interviewers Want:

A passion for delivering results and mastering the craft of paid advertising.

Strong Answer:

“I’m motivated by the challenge of driving measurable results and continuously improving campaign performance. I enjoy analyzing data to uncover insights and testing new strategies to maximize ROI. Seeing the tangible impact of my work on a client’s success keeps me excited to grow in this field.”

Poor Answer:

“I’m motivated by the results I can achieve in campaigns and by learning new strategies.”

Questions to ask interviewer

- What tools and platforms will I use in this role?

- What kind of campaigns will I work on?

- What opportunities are there for mentorship or growth?

- How does the PPC team collaborate with other teams here?

- What are the next steps in the hiring process?

Top 50+ Database Engineer Interview Questions

Table of Contents

Recommended Resources

30 QA Interview Questions and Answers for 2025

Machine Learning Engineer Interview Questions

IT Support Interview Questions

35 Most Common Business Development Interview Questions

Digital Marketing Specialist Interview Questions

Top 60 Azure Data Engineer Interview Questions